In today’s data-driven world, businesses need to process and analyze vast amounts of data in real time to stay competitive. This is where Apache Kafka comes into play. Kafka is a distributed streaming platform that has gained immense popularity for its ability to handle real-time data streams at scale. In this blog post, we’ll explore what Kafka is, why it’s important, and how it’s transforming the way organizations manage and process data.

Understanding Apache Kafka

At its core, Apache Kafka is a distributed messaging system that is designed for high throughput, fault tolerance, and real-time data streaming. Originally developed by LinkedIn, it was open-sourced and is now maintained by the Apache Software Foundation. Kafka uses a publish-subscribe model, where data is published by producers to topics, and consumers subscribe to those topics to receive and process the data.

Key Concepts

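- Topics and Partitions: Data in Kafka is organized into topics, and each topic is divided into one or more partitions. Each partition is an ordered, append-only log, so message order is guaranteed within a partition but not across the whole topic. Partitions are distributed across Kafka brokers, which is what allows a topic to be written and read in parallel.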

- Producers: Producers publish data to Kafka topics. Because they can publish records as events occur, Kafka is well suited to streaming applications.

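- Consumers: Consumers subscribe to Kafka topics and process the messages. Each consumer tracks its position in a partition as an offset, and because Kafka retains messages after they are read, the same topic can feed both real-time processing and periodic batch jobs.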

- Brokers: Kafka brokers are the servers that store and manage the data. They are responsible for handling incoming messages, storing them in partitions, and serving messages to consumers.
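To make the relationship between topics, partitions, producers, and consumers concrete, here is a minimal in-memory sketch in Python. It is a toy model, not Kafka's client API: `ToyTopic`, `ToyConsumer`, and the `orders` topic are hypothetical names, and `zlib.crc32` is only a stand-in for the murmur2 hash that Kafka's Java client uses for keyed records.

```python
import zlib
from collections import defaultdict


class ToyTopic:
    """In-memory stand-in for a topic: one append-only list per partition."""

    def __init__(self, name, num_partitions):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Assumption: crc32 replaces Kafka's real key hash for illustration.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def publish(self, key, value):
        """Append a record and return (partition, offset), as a producer would see."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1


class ToyConsumer:
    """Reads a partition sequentially and remembers the next offset to read."""

    def __init__(self, topic):
        self.topic = topic
        self.offsets = defaultdict(int)

    def poll(self, partition, max_records=10):
        start = self.offsets[partition]
        records = self.topic.partitions[partition][start:start + max_records]
        self.offsets[partition] += len(records)
        return records


topic = ToyTopic("orders", num_partitions=3)
for i in range(6):
    topic.publish(key=f"customer-{i % 2}", value=f"order-{i}")

consumer = ToyConsumer(topic)
print(consumer.poll(topic.partition_for("customer-0")))
```

Because the partition is derived from the key, all records for one customer land in the same partition and are read back in the order they were published. Reading does not remove anything from the log; the consumer only advances its own offset.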

Why Kafka Matters

Kafka has become a fundamental component of modern data architectures for several reasons:

- Real-Time Data Processing: Kafka excels at handling real-time data streams, making it invaluable for applications like real-time analytics, monitoring, and event-driven architectures.

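- Scalability: Kafka scales horizontally. As data volumes grow, you can add brokers to a cluster and add partitions to a topic. A topic's partition count can be increased but not decreased, and adding partitions changes which partition a given key maps to, so it is worth planning partition counts ahead.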

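- Fault Tolerance: Kafka is designed to be fault-tolerant. Each partition is replicated across multiple brokers, with one replica acting as leader and the others as followers. If the broker hosting the leader fails, an in-sync follower takes over, so data remains available.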

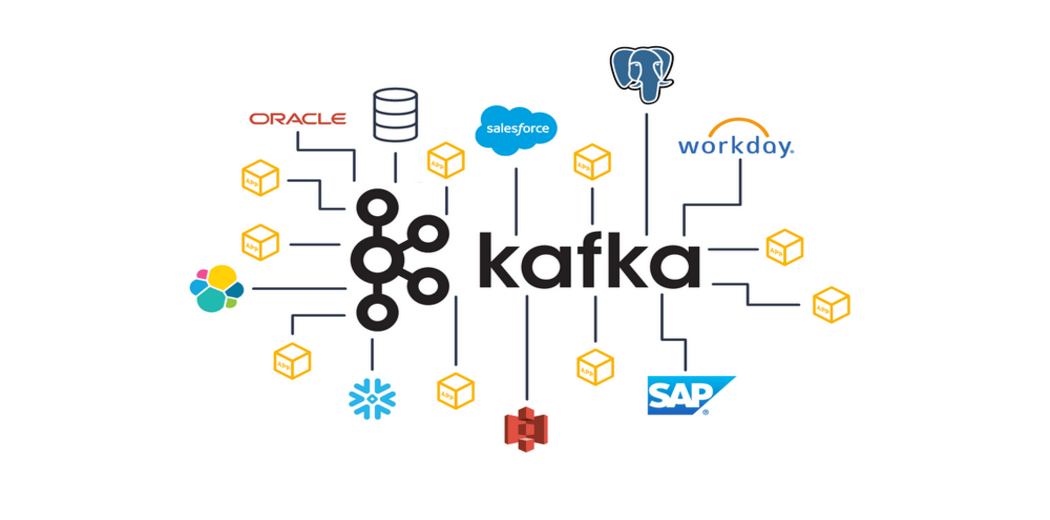

- Versatility: Kafka integrates with a wide range of data sources and sinks, such as databases, object stores, and search indexes, through Kafka Connect and its ecosystem of connectors.
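The fault-tolerance point can be illustrated with a small sketch of a replicated partition. This is a deliberately simplified model with hypothetical names (`ToyPartition`, broker IDs 1 to 3): it copies each record to every live replica synchronously and promotes the lowest-numbered surviving broker, whereas real Kafka followers fetch from the leader and a controller elects the new leader from the in-sync replicas.

```python
class ToyPartition:
    """One partition replicated on several brokers (simplified model)."""

    def __init__(self, broker_ids):
        self.replicas = {b: [] for b in broker_ids}  # one log copy per broker
        self.alive = set(broker_ids)
        self.leader = broker_ids[0]

    def append(self, record):
        # Simplification: write to every live replica at once.
        for broker in self.alive:
            self.replicas[broker].append(record)

    def fail(self, broker_id):
        """Mark a broker as down and promote a follower if it was the leader."""
        self.alive.discard(broker_id)
        if broker_id == self.leader:
            if not self.alive:
                raise RuntimeError("no live replica left for this partition")
            # Assumption: pick the lowest ID; Kafka uses its own election rules.
            self.leader = min(self.alive)

    def read(self):
        # Clients always read from the current leader in this model.
        return list(self.replicas[self.leader])


partition = ToyPartition([1, 2, 3])
partition.append("event-a")
partition.append("event-b")

partition.fail(1)            # the leader's broker goes down
partition.append("event-c")  # writes continue on the new leader
print(partition.leader, partition.read())
```

The records written before the failure are still readable afterwards because a follower already held a copy when it was promoted.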

Use Cases

Kafka is used in a wide range of use cases, including:

- Log Aggregation: Centralizing logs from different services and applications for analysis.

- Event Sourcing: Capturing all changes to an application’s state as a sequence of immutable events.

- Real-Time Analytics: Enabling organizations to make data-driven decisions in real time.

- Monitoring: Tracking system metrics and application performance in real time.

- And More: Kafka’s flexibility makes it suitable for countless other scenarios.
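Of the use cases above, event sourcing is the easiest to show in a few lines. The sketch below keeps an append-only list of immutable events and rebuilds state by replaying it. The `Event` type, the account names, and the amounts are made up for illustration, and a plain Python list stands in for what would be a Kafka topic partition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: an event cannot be modified once recorded
class Event:
    account: str
    kind: str    # "deposited" or "withdrew"
    amount: int


def replay(events):
    """Rebuild account balances by applying every event in order."""
    balances = {}
    for event in events:
        sign = 1 if event.kind == "deposited" else -1
        balances[event.account] = balances.get(event.account, 0) + sign * event.amount
    return balances


# Append-only event log; in Kafka this role is played by a topic partition.
event_log = [
    Event("alice", "deposited", 100),
    Event("bob", "deposited", 50),
    Event("alice", "withdrew", 30),
]

current = replay(event_log)
as_of_second_event = replay(event_log[:2])
print(current, as_of_second_event)
```

Since the log is never rewritten, replaying a prefix of it reconstructs the state at any earlier point, which is the main appeal of the pattern.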

Conclusion

Apache Kafka is changing the way organizations manage and process data. Its real-time capabilities, scalability, and fault tolerance make it a cornerstone of modern data architectures. As businesses strive to stay competitive in an increasingly data-driven world, Kafka’s role in enabling real-time data streaming is set to become even more critical. Embracing Kafka is not just a technological choice; it’s a strategic one for businesses looking to harness the power of data in real time.

In our next blog post, we’ll delve deeper into Kafka’s ecosystem, exploring tools like Kafka Streams and ksqlDB for real-time stream processing. Stay tuned for more insights into the world of Kafka!